In this chapter of the PyTorch tutorial, we will look at an end-to-end example of training, validating, and testing a Neural Network in PyTorch.

In this chapter, we will train a Dense Feed Forward Neural Network on the MNIST dataset, a collection of handwritten digits from 0 to 9. The example is meant to demonstrate the basics of training, validation, and testing in PyTorch. Real-world projects often change this workflow significantly.

Importing Libraries

We start by importing the libraries that we will use in this example.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import matplotlib as mpl
import torch
import torchvision
from torch import nn
from torch.nn import functional as F
from torch.nn import CrossEntropyLoss
from torch.utils.data import Dataset, DataLoader, random_split
from torch.optim import SGD
```

Note– We will be using the torchvision library just to load the dataset, so don’t feel intimidated.

Loading Dataset

Before loading the dataset, we need to define a transformation. A transformation is applied to the data before it is fed to the Neural Network, and transformations are commonly used for data augmentation. We will not use data augmentation in this example; we use a transformation only to convert the images to their tensor representations. ToTensor converts each image into a float tensor of shape (channels, height, width) and scales its 8-bit pixel values to the range [0, 1].
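As a rough illustration of what ToTensor does for a grayscale image such as an MNIST digit, the sketch below converts a random 8-bit NumPy array by hand. The helper name to_tensor_sketch is hypothetical, and it only covers the single-channel uint8 case; torchvision's ToTensor also handles PIL images and multi-channel inputs.

```python
import numpy as np
import torch

def to_tensor_sketch(image: np.ndarray) -> torch.Tensor:
    """Hypothetical stand-in for ToTensor on a 2-D uint8 grayscale image."""
    tensor = torch.from_numpy(image).float() / 255.0  # scale 0..255 to 0.0..1.0
    return tensor.unsqueeze(0)  # add a channel dimension: (H, W) -> (1, H, W)

# A fake 28x28 "image" with random pixel values, standing in for an MNIST digit
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(28, 28), dtype=np.uint8)
tensor = to_tensor_sketch(image)
print(tensor.shape, tensor.dtype)  # torch.Size([1, 28, 28]) torch.float32
```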

```python
# Defining transformation to convert images to tensor
transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])
```

We will now load our training data from the torchvision datasets module.

```python
train_data = torchvision.datasets.MNIST(root = './train', train = True, download = True, transform = transform)
```

Next, we load our testing data from the torchvision datasets module.

```python
test_data = torchvision.datasets.MNIST(root = './test', train = False, download = True, transform = transform)
```

Finally, the MNIST test set contains 10,000 images, so we set aside half of it (5,000 images) as validation data for evaluating the model while it trains.

```python
valid_data, test_data = random_split(test_data, [5000, 5000])
```

Note– Notice that we passed the required transformation when creating the training and test datasets. You can apply different transformations to different datasets. However, the transformations are not applied when the datasets are created. They are applied lazily, each time a sample is retrieved from a dataset, for example when a data loader fetches a batch for the Neural Network.
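To make the lazy behaviour concrete, here is a small sketch with a hypothetical CountingDataset that records how often its transform runs. It mirrors the way torchvision datasets apply their transform inside __getitem__, but it is a toy example, not torchvision's implementation.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class CountingDataset(Dataset):
    """Hypothetical toy dataset that counts how often its transform is applied."""

    def __init__(self, n, transform):
        self.data = torch.arange(n, dtype=torch.float32)
        self.transform = transform
        self.calls = 0

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        self.calls += 1  # the transform runs here, once per retrieved sample
        return self.transform(self.data[idx])

ds = CountingDataset(10, transform=lambda x: x * 2)
calls_before = ds.calls  # 0: creating the dataset transforms nothing
batch = next(iter(DataLoader(ds, batch_size=4)))
calls_after = ds.calls   # 4: only the samples in the first batch were transformed
print(calls_before, calls_after, batch.tolist())  # 0 4 [0.0, 2.0, 4.0, 6.0]
```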

Creating Data Loaders

We now create data loaders to feed our datasets to the Neural Network. We create 3 separate data loaders, one each for training data, validation data, and test data. The batch size for each of these data loaders is 32.
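As a quick look at what a data loader with a batch size of 32 yields, the sketch below uses random tensors as a stand-in for the 5,000-image validation split (TensorDataset replaces the real MNIST data here). It shows the batch shape and that the final batch is smaller, because 5,000 is not a multiple of 32.

```python
import math
import torch
from torch.utils.data import TensorDataset, DataLoader

# Random stand-in for a 5000-image split: 1x28x28 images with labels 0-9
images = torch.rand(5000, 1, 28, 28)
labels = torch.randint(0, 10, (5000,))
loader = DataLoader(TensorDataset(images, labels), batch_size=32)

first_images, first_labels = next(iter(loader))
print(first_images.shape)  # torch.Size([32, 1, 28, 28])
print(len(loader), math.ceil(5000 / 32))  # 157 157

for last_images, last_labels in loader:
    pass  # after the loop, these hold the final batch

# The final batch only holds the 8 leftover samples (5000 = 156 * 32 + 8)
print(last_images.shape[0])  # 8
```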

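```python
# Shuffling the training data gives the model a different order of samples each epoch
train_dataloader = DataLoader(train_data, batch_size = 32, shuffle = True)
valid_dataloader = DataLoader(valid_data, batch_size = 32)
test_dataloader = DataLoader(test_data, batch_size = 32)
```

Creating Neural Network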

We will now create a class for our Neural Network and define the architecture of the network and the flow of data through it.
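Before writing the class, it can help to check the layer sizes. The sketch below builds the same 784, 300, 100, 10 layer stack with nn.Sequential and nn.Flatten (a swapped-in style, not how this tutorial defines MyNet), then checks the output shape on a dummy batch and counts the trainable parameters.

```python
import torch
from torch import nn

# Equivalent layer stack written with nn.Sequential (a sketch, not the MyNet class)
net = nn.Sequential(
    nn.Flatten(),          # (N, 1, 28, 28) -> (N, 784)
    nn.Linear(784, 300), nn.ReLU(),
    nn.Linear(300, 100), nn.ReLU(),
    nn.Linear(100, 10),    # raw scores (logits) for the 10 digits
)

dummy_batch = torch.rand(32, 1, 28, 28)
print(net(dummy_batch).shape)  # torch.Size([32, 10])

# Weights plus biases: (784*300 + 300) + (300*100 + 100) + (100*10 + 10)
num_params = sum(p.numel() for p in net.parameters())
print(num_params)  # 266610
```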

```python
class MyNet(nn.Module):
    def __init__(self):
        super(MyNet, self).__init__()
        self.layer1 = nn.Linear(784, 300)
        self.layer2 = nn.Linear(300, 100)
        self.layer3 = nn.Linear(100, 10)

    def forward(self, x):
        # Flatten each 1x28x28 image into a 784-dimensional vector
        x = x.view(-1, 784)
        x = F.relu(self.layer1(x))
        x = F.relu(self.layer2(x))
        # The final layer returns raw scores (logits) for the 10 digit classes
        return self.layer3(x)
```

Next, we create our Neural Network by creating an instance of the MyNet class.

```python
model = MyNet()
```

Note– Notice that we do not apply the softmax activation function to the final layer. This is because we will optimize our Neural Network with the Cross Entropy Loss, and PyTorch's CrossEntropyLoss expects raw, unnormalized scores (logits): it applies log-softmax internally before computing the loss. Applying softmax in the final layer would therefore apply softmax twice to the same output, which is undesirable.
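A quick way to convince yourself of this is to compare CrossEntropyLoss with an explicit log-softmax followed by NLLLoss, and to see what an extra softmax does to the loss. The logits and labels below are made-up values for illustration.

```python
import torch
from torch import nn
from torch.nn import functional as F

logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 1.5, 0.3]])  # made-up scores for 2 samples, 3 classes
labels = torch.tensor([0, 1])

ce = nn.CrossEntropyLoss()(logits, labels)
# CrossEntropyLoss is log-softmax followed by the negative log-likelihood loss
manual = F.nll_loss(F.log_softmax(logits, dim=1), labels)
print(torch.allclose(ce, manual))  # True

# Feeding softmax outputs means softmax is applied twice, giving a different loss
double = nn.CrossEntropyLoss()(F.softmax(logits, dim=1), labels)
print(torch.allclose(ce, double))  # False
```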

Creating Optimizer and Loss Functions

We create a Stochastic Gradient Descent (SGD) optimizer to optimize the parameters of our model, with a learning rate of 0.001.
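For plain SGD (momentum and weight decay both default to 0), each call to optimizer.step() moves every parameter against its gradient: param = param - lr * grad. The toy example below checks this on a single two-element tensor, with a made-up loss and a larger learning rate (0.1 rather than 0.001) so the change is easy to see.

```python
import torch
from torch.optim import SGD

w = torch.tensor([1.0, -2.0], requires_grad=True)
optimizer = SGD([w], lr=0.1)

loss = (w ** 2).sum()  # made-up loss; its gradient is 2 * w = [2.0, -4.0]
optimizer.zero_grad()
loss.backward()
optimizer.step()       # w <- w - 0.1 * [2.0, -4.0]

print(w.detach())  # approximately [0.8, -1.6]
```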

```python
optimizer = SGD(model.parameters(), lr = 0.001)
```

We also create a loss function, which measures how well or badly our model is performing at a given time and is the quantity the optimizer minimizes.

```python
loss_function = torch.nn.CrossEntropyLoss()
```

Performing Training and Validation

We will now train the model on the training dataset while simultaneously measuring how it performs on the validation dataset.
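The loop below switches between model.train() and model.eval(). MyNet has no dropout or batch-normalization layers, so for this particular model the two modes behave the same, but for layers such as nn.Dropout they do not. The sketch below uses a made-up layer stack to show the difference, together with torch.no_grad(), which stops PyTorch from recording operations for backpropagation during evaluation.

```python
import torch
from torch import nn

torch.manual_seed(0)
net = nn.Sequential(nn.Linear(4, 4), nn.Dropout(p=0.5))  # made-up layer stack
x = torch.ones(8, 4)

net.train()
train_out = net(x)  # dropout randomly zeroes activations and scales the rest by 2

net.eval()
with torch.no_grad():
    eval_out = net(x)        # dropout does nothing in eval mode
    linear_only = net[0](x)  # output of the Linear layer alone

print(torch.equal(eval_out, linear_only))  # True: eval mode skips dropout
print(train_out.requires_grad, eval_out.requires_grad)  # True False
```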

for epoch in range(10):

# Performing Training for each epoch

training_loss = 0.

model.train()

# The training loop

for batch in train_dataloader:

optimizer.zero_grad()

input, label = batch

output = model(input)

loss = loss_function(output, label)

loss.backward()

optimizer.step()

training_loss += loss.item()

# Performing Validation for each epoch

validation_loss = 0.

model.eval()

# The validation loop

for batch in valid_dataloader:

input, label = batch

output = model(input)

loss = loss_function(output, label)

validation_loss += loss.item()

# Calculating the average training and validation loss over epoch

training_loss_avg = training_loss/len(train_dataloader)

validation_loss_avg = validation_loss/len(valid_dataloader)

# Printing average training and average validation losses

print("Epoch: {}".format(epoch))

print("Training loss: {}".format(training_loss_avg))

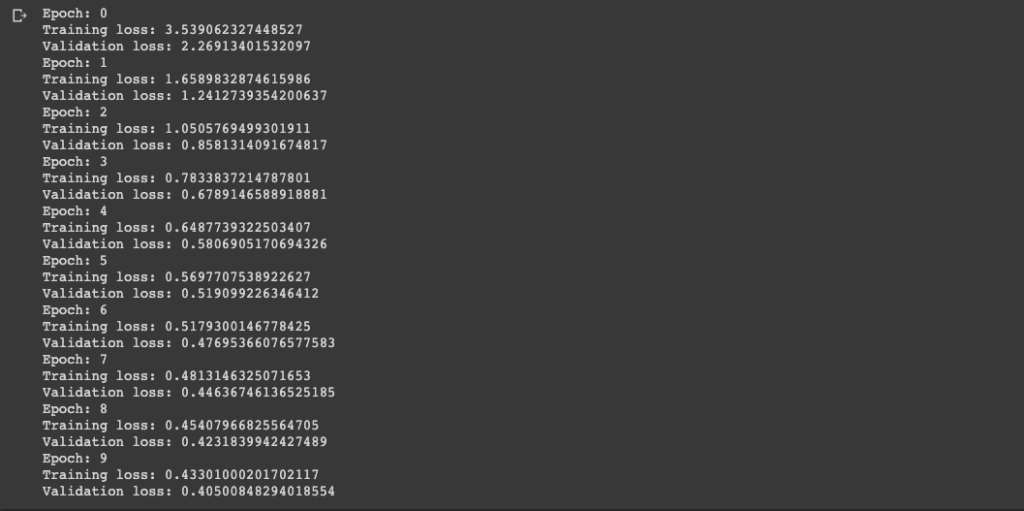

print("Validation loss: {}".format(validation_loss_avg))The image below shows training and validation losses over the respective datasets from epoch 0 to epoch 9. Notice the steady decline in the validation loss. Also, since the validation loss is still going down, you can train the model for a few more epochs.

Once the validation loss stops decreasing, you can move on to evaluating the accuracy of your model on the test dataset that we created.
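One common way to automate "train until the validation loss stops decreasing" is early stopping with a patience value: stop once the validation loss has not improved for a given number of consecutive epochs. The should_stop helper below is a hypothetical sketch of that rule in plain Python, run on made-up loss values; it is not part of this tutorial's training loop or of PyTorch.

```python
def should_stop(val_losses, patience=2, min_delta=0.0):
    """Hypothetical early-stopping rule.

    Returns True when none of the last `patience` losses improved on the
    best earlier loss by more than `min_delta`.
    """
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    recent = val_losses[-patience:]
    return all(loss >= best_before - min_delta for loss in recent)

# Made-up validation losses, one per epoch
history = [0.90, 0.62, 0.48, 0.41, 0.42, 0.43]

stop_epoch = None
for epoch in range(len(history)):
    if should_stop(history[:epoch + 1], patience=2):
        stop_epoch = epoch  # epochs counted from 0, as in the training loop
        break
print(stop_epoch)  # 5
```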

Testing Accuracy

We will now test the accuracy of the model on the test dataset.

```python
# Setting the number of correct predictions to 0
num_correct_pred = 0
model.eval()

# Running the model over test dataset and calculating total correct predictions
with torch.no_grad():
    for batch in test_dataloader:
        inputs, labels = batch
        output = model(inputs)
        predictions = output.argmax(dim=1)
        num_correct_pred += (predictions == labels).sum().item()

# Calculating the accuracy of model on test dataset.
# Divide by the number of samples, not batches * batch_size: with 5000 samples
# and a batch size of 32, the last batch only holds 8 samples.
accuracy = num_correct_pred/len(test_dataloader.dataset)
print(accuracy)
```

Note– You can modify the training and validation loops to include accuracy along with the loss when training the model.
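As a starting point for that, here is a sketch of a hypothetical count_correct helper together with the bookkeeping a loop needs: accumulate the number of correct predictions and the number of samples seen, then divide at the end. The batches below are made-up logits and labels of unequal size, which is exactly the case where dividing by the true sample count matters.

```python
import torch

def count_correct(logits: torch.Tensor, labels: torch.Tensor) -> int:
    """Hypothetical helper: how many rows have their highest score at the true label."""
    return (logits.argmax(dim=1) == labels).sum().item()

# Two made-up batches: 3 samples, then a smaller final batch of 1 sample
batches = [
    (torch.tensor([[2.0, 0.1, 0.3], [0.2, 0.1, 1.5], [0.9, 1.2, 0.1]]), torch.tensor([0, 2, 0])),
    (torch.tensor([[0.1, 3.0, 0.2]]), torch.tensor([1])),
]

correct, seen = 0, 0
for logits, labels in batches:
    correct += count_correct(logits, labels)
    seen += labels.size(0)  # count actual samples, since the last batch can be smaller

accuracy = correct / seen
print(correct, seen, accuracy)  # 3 4 0.75
```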

Making Predictions

We can make predictions by passing the inputs to the Neural Network and calculating the outputs.

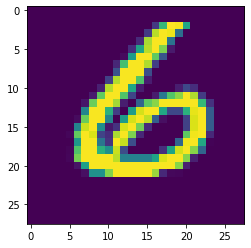

We will make a prediction on the second image of the test data itself.

# Making Prediction

prediction = model(test_data[1][0])The figure below is the second image of the test dataset. It looks clearly like a 6.

Let’s look at what our model predicts.

```python
print(prediction.argmax().item())
# Output: 6
```

The model correctly predicts a 6.
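If you also want to know how confident the model is, you can turn its logits into probabilities with softmax. Softmax preserves the ordering of the scores, so the predicted class does not change. The logits below are made-up values standing in for the output of model(test_data[1][0]), which has shape (1, 10).

```python
import torch
from torch.nn import functional as F

# Made-up logits for one image and 10 digit classes (shape (1, 10), like MyNet's output)
logits = torch.tensor([[0.1, -1.2, 0.3, 0.0, 0.5, -0.4, 3.2, -2.0, 0.8, 0.2]])

probs = F.softmax(logits, dim=1)          # each row now sums to 1
confidence, predicted = probs.max(dim=1)  # highest probability and its class index

print(predicted.item())  # 6
print(round(confidence.item(), 3))
```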

Result

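In our run, the model trained in this example reached an accuracy of 88.57% on the test dataset. Your exact figure will vary with the random validation/test split and the random weight initialization.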

Hopefully, this example has shown you how to train, validate, and test a model in PyTorch.