Getting a Machine Learning model to make accurate predictions during testing is one thing, but getting it to work in the real world is a different challenge altogether. There are many reasons why a model that performs well in training may perform poorly in production, or may stop performing well after some time there. One of the major reasons is Input Drift. In this short blog post, I will explain what Input Drift is and illustrate it with a somewhat contrived example.

What is Input Drift?

Input Drift is a change in the input data between the data on which the model was trained and the data on which the model is making predictions. The usual result is a decrease in the accuracy of the model's predictions. It is therefore important that the training data is similar to the data the model will see at prediction time. In reality, however, input data keeps changing over time, so it is important to monitor this shift and act on it when it occurs, for example by retraining the model on more recent data.
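To make the idea of monitoring a little more concrete, here is a minimal sketch of one possible check: comparing a single numeric input feature between training and production with the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical distributions). The function names, the synthetic data, and the threshold of 0.2 are all my own assumptions for illustration; a real monitoring setup would pick features and thresholds to suit the problem.

```python
import random
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the empirical CDFs of two samples."""
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # Both empirical CDFs are step functions, so the largest gap
    # is always reached at one of the observed values.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def drift_detected(reference, current, threshold=0.2):
    """Flag drift when the gap exceeds a threshold (0.2 is an arbitrary choice)."""
    return ks_statistic(reference, current) > threshold


# Synthetic stand-ins for one input feature (assumed data, not from a real model).
rng = random.Random(0)
train_feature = [rng.gauss(0.0, 1.0) for _ in range(1000)]
same_feature = [rng.gauss(0.0, 1.0) for _ in range(1000)]     # no drift
shifted_feature = [rng.gauss(1.5, 1.0) for _ in range(1000)]  # mean has moved

print("no drift:", drift_detected(train_feature, same_feature))
print("drift:   ", drift_detected(train_feature, shifted_feature))
```

This only looks at one feature at a time. For images, such a check would more plausibly be run on summary statistics or on features extracted by the model than on raw pixels.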

An Illustrative Example

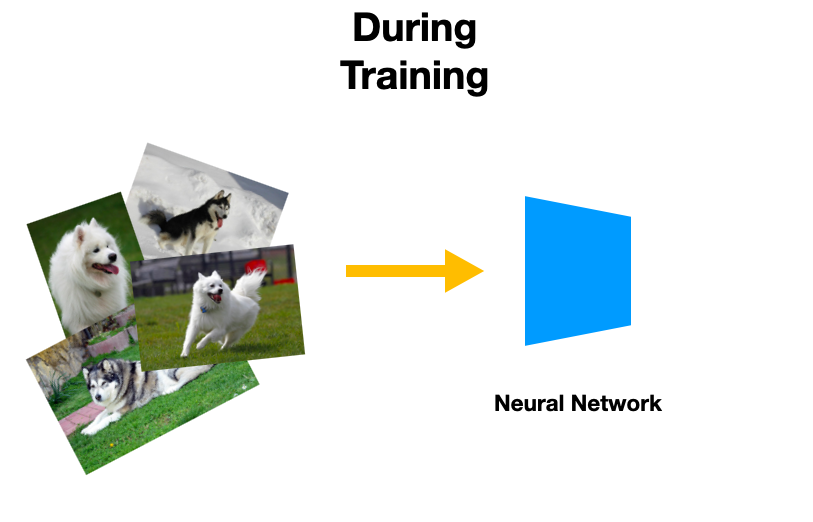

Let’s consider an example of Input Drift. Say we have trained a Neural Network to recognize the breed of a dog from its photo. The model is trained to recognize dogs found in the polar regions, such as Alaskan Malamutes, Huskies, Samoyeds, and Canadian Eskimo Dogs.

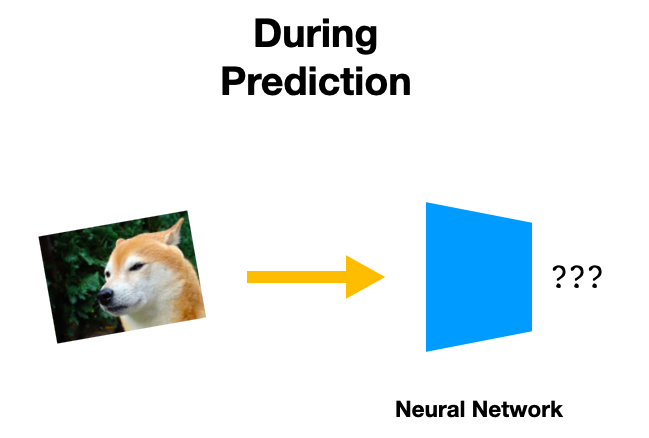

However, for some reason (say, more people watching memes), there is a shift in the input data during prediction. Along with the polar breeds mentioned above, the input data now also contains images of the famous “meme dog”, the Shiba Inu. Since the model was trained on images of polar dogs, it will be able to recognize images of those breeds. However, because our model was never trained on images of the Shiba Inu, it will not be able to handle them effectively during inference.

This will result in lower accuracy during inference than the same model achieved during training. This drift from the training data to the actual data on which predictions are made is called Input Drift.
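One simple, if imperfect, way to notice inputs like the Shiba Inu images is to flag predictions where the model is not confident in any of its known classes. The sketch below assumes the network outputs one raw score (logit) per breed; the logit values, the `predict_or_flag` helper, and the 0.6 cut-off are made up for illustration. Keep in mind that neural networks can also be confidently wrong on unfamiliar inputs, so this is a rough signal and not a guarantee.

```python
import math

BREEDS = ["Alaskan Malamute", "Siberian Husky", "Samoyed", "Canadian Eskimo Dog"]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_or_flag(logits, min_confidence=0.6):
    """Return (breed, confidence), or (None, confidence) if the model is unsure."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return None, probs[best]
    return BREEDS[best], probs[best]


# Hypothetical logits: one clear Husky photo, one photo of an unseen breed.
husky_logits = [0.5, 4.0, 0.2, 0.1]
shiba_logits = [1.1, 1.3, 0.9, 1.0]

print(predict_or_flag(husky_logits))  # confident prediction
print(predict_or_flag(shiba_logits))  # flagged as uncertain
```

Tracking how often predictions get flagged over time gives a cheap indicator that the incoming images no longer look like the training set.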

Note: This is just an illustrative example to demonstrate the concept of Input Drift. In this particular case, there would be no label corresponding to the new breed of dog, and hence no correct answer. In practical cases, however, the representation of data belonging to the same class can change. In the context of this example, the training images might contain only front-facing dogs, while the images seen during inference show dogs looking and standing in all directions. The input data therefore drifts as we go from training to prediction, and this can cause the accuracy of the model to falter.
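The effect described in the note can be reproduced with a toy simulation. The sketch below is not a Neural Network: it is a one-feature, two-class threshold classifier on synthetic data, and the single number stands in for whatever the model picks up from an image. The `offset` parameter is my own device for simulating a change in representation (such as a change in pose) while the classes themselves stay the same.

```python
import random


def make_data(rng, n, offset=0.0):
    """Generate (feature, label) pairs; offset shifts the feature for both classes."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = -1.0 if label == 0 else 1.0
        data.append((rng.gauss(center + offset, 0.5), label))
    return data


def fit_threshold(data):
    """Place the decision threshold midway between the two class means."""
    class0 = [x for x, label in data if label == 0]
    class1 = [x for x, label in data if label == 1]
    return (sum(class0) / len(class0) + sum(class1) / len(class1)) / 2


def accuracy(data, threshold):
    """Fraction of points where 'feature above threshold' matches label 1."""
    correct = sum((x > threshold) == (label == 1) for x, label in data)
    return correct / len(data)


rng = random.Random(42)
train = make_data(rng, 2000)
threshold = fit_threshold(train)

acc_same = accuracy(make_data(rng, 2000), threshold)               # same distribution
acc_drift = accuracy(make_data(rng, 2000, offset=1.0), threshold)  # drifted inputs

print(f"accuracy without drift: {acc_same:.3f}")
print(f"accuracy with drift:    {acc_drift:.3f}")
```

The labels and the classifier are identical in both runs. Only the inputs have moved, which is enough to push many class 0 points to the wrong side of the threshold learned during training.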

Source of Images

- Alaskan Malamute: Image by ertuzio from Pixabay

- Siberian Husky: Image by forthdown from Pixabay

- Samoyed: Image by No-longer-here from Pixabay

- Eskimo: Image by carpenter844 from Pixabay

- Shiba Inu: Image by Petra Göschel from Pixabay

You can also check out my blog post on Self-Supervised Learning here.

I have also written a blog post on Imitation Learning; you can check it out here.