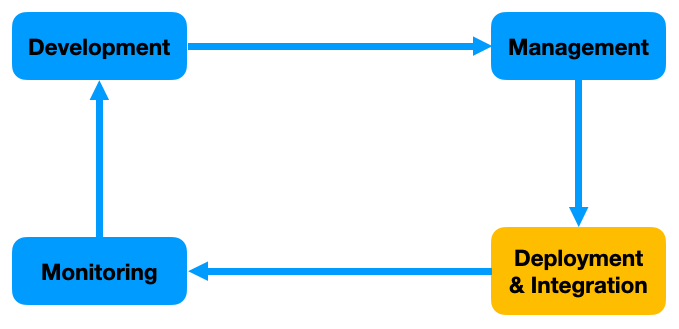

Machine Learning models need to be deployed before they can be useful. Deployment of a model is closely intertwined with, and dependent on, its integration. In this chapter of the MLOps tutorial, you will learn about deploying Machine Learning models. In the next chapter, you will learn about integrating Machine Learning models.

Deployment is the process of converting the model to a form that can be executed in the production environment.

Optimizing the Model

Once a model has been trained, it may need to be optimized before it is deployed. These optimizations can include:

- The model is typically trained using 32-bit floating-point values. After training, the model can be converted to use lower-precision values, such as 16-bit floating-point or 8-bit integer values. This method is known as Quantization and can reduce both the memory requirements and the inference time of the model in production, often at the cost of some accuracy.

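- Knowledge Distillation uses the original Neural Network trained on the dataset (the ‘teacher’) to train a smaller ‘student’ Neural Network that learns to mimic the teacher's outputs. This approach differs from Noisy Student training, in which the student is usually as large as or larger than the teacher and the goal is to improve accuracy rather than to shrink the model.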

- Another way to optimize the model is by pruning it. While pruning a Neural Network, certain parameters or nodes are removed, making the Neural Network sparse. This can decrease the memory requirements and inference time of the model. However, pruning is generally much more difficult to perform well than Quantization.
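As a rough illustration of Quantization, the NumPy sketch below maps float32 weights to 8-bit integers using a single symmetric scale for the whole tensor, then maps them back to measure the rounding error. This is a deliberate simplification: production toolkits such as PyTorch or TensorFlow Lite typically also use zero points, per-channel scales, and calibration data, and the function names here are hypothetical.

```python
import numpy as np


def quantize_int8(weights: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization of float32 weights to int8 (illustrative)."""
    # The scale maps the largest absolute weight onto the int8 limit of 127.
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate float32 weights from the int8 values and the scale."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=(256, 256)).astype(np.float32)

q, scale = quantize_int8(w)
w_hat = dequantize_int8(q, scale)

print(f"float32 size: {w.nbytes} bytes")  # 262144
print(f"int8 size:    {q.nbytes} bytes")  # 65536
print(f"max abs error: {np.max(np.abs(w - w_hat)):.6f} (bound: scale / 2 = {scale / 2:.6f})")
```

The int8 copy needs a quarter of the memory, and because each value is rounded to the nearest step, the reconstruction error per weight stays within about half of `scale`.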
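A common way to implement Knowledge Distillation is to train the student on a weighted mix of two losses: a cross-entropy term against the true labels, and a term that pulls the student's temperature-softened output distribution toward the teacher's. The sketch below computes that combined loss in NumPy for one batch, following the temperature-scaled formulation of Hinton et al. The temperature, the weighting `alpha`, the example logits, and the helper names are illustrative assumptions; a real training loop would minimize this loss with a framework's automatic differentiation.

```python
import numpy as np


def softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Row-wise softmax with temperature; a higher temperature gives softer probabilities."""
    z = logits / temperature
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, labels, temperature=4.0, alpha=0.5):
    """Weighted sum of a soft-target (KL) term and a hard-label cross-entropy term."""
    eps = 1e-12
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions, averaged over the batch.
    kl = np.sum(
        p_teacher * (np.log(p_teacher + eps) - np.log(p_student_soft + eps)), axis=1
    ).mean()
    # Ordinary cross-entropy of the student against the true labels (temperature 1).
    p_student = softmax(student_logits)
    ce = -np.log(p_student[np.arange(len(labels)), labels] + eps).mean()
    # Scaling by temperature**2 keeps the soft-target term on a comparable gradient scale.
    return alpha * temperature**2 * kl + (1 - alpha) * ce


# Hypothetical logits for a batch of two examples and three classes.
teacher = np.array([[5.0, 1.0, 0.5], [0.2, 4.0, 0.1]])
student = np.array([[2.0, 0.5, 0.3], [0.1, 1.5, 0.4]])
labels = np.array([0, 1])

print(distillation_loss(student, teacher, labels))
# With alpha=1.0 only the soft-target term remains, and it is zero when the student matches the teacher.
print(distillation_loss(teacher, teacher, labels, alpha=1.0))
```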
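One simple pruning strategy is magnitude pruning: remove the weights with the smallest absolute values, on the assumption that they contribute least to the output. The NumPy sketch below zeroes out a chosen fraction of a weight matrix and returns the resulting mask. The helper name and the 90% sparsity level are hypothetical, and in practice pruning is usually followed by fine-tuning to recover lost accuracy.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> tuple[np.ndarray, np.ndarray]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # number of weights to remove
    if k == 0:
        return weights.copy(), np.ones_like(weights, dtype=bool)
    flat = np.abs(weights).ravel()
    # The k-th smallest magnitude becomes the pruning threshold.
    threshold = np.partition(flat, k - 1)[k - 1]
    mask = np.abs(weights) > threshold  # True for the weights that are kept
    return weights * mask, mask


rng = np.random.default_rng(0)
w = rng.normal(size=(100, 100))
pruned, mask = magnitude_prune(w, sparsity=0.9)
print(f"fraction of zero weights: {np.mean(pruned == 0):.2f}")  # 0.90
```

The pruned array above is still stored densely, so it occupies as much memory as before. The memory and speed benefits only appear once the weights are stored in a sparse format or executed by a runtime with sparse-aware kernels.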

Considerations before Model Deployment

Models are usually developed and trained using the Python programming language and open-source Python libraries, in Jupyter Notebook, another interactive environment (such as IPython), or an IDE (Integrated Development Environment). However, the environment in which the model is trained is almost never the same as the production environment. For example:

- The model might be trained in a Jupyter Notebook using Python, but it will not run in a Jupyter Notebook in the production environment. Instead, it might run on a server, on an edge device (such as a mobile phone or an IoT device), or in a container.

- In the production environment, the model might run on different hardware from the hardware it was trained on. For example, the model might be trained on Tensor Processing Units (TPUs) but run on CPUs, GPUs, or FPGAs (Field Programmable Gate Arrays).

- Additionally, the tools, frameworks, and runtimes used in the production environment might differ from those used during training. This creates a need to convert the model into a suitable form before moving it to the production environment. This conversion is commonly done with interchange formats such as Open Neural Network Exchange (ONNX) or Predictive Model Markup Language (PMML).
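ONNX and PMML are complete specifications with their own exporters and runtimes, and the sketch below uses neither. Instead, it illustrates the underlying idea with a hypothetical JSON layout: the trained parameters of a tiny logistic-regression model are written to a framework-neutral file, and a separate function scores an example from that file using only the Python standard library, as a production service built on a different stack might. The file layout, parameter values, and function names are all assumptions made for this example.

```python
import json
import math
import tempfile
from pathlib import Path

# Hypothetical trained parameters of a tiny logistic-regression model.
model = {
    "format_version": 1,
    "model_type": "logistic_regression",
    "feature_names": ["age", "income"],
    "coefficients": [0.8, -0.3],
    "intercept": -0.5,
}


def export_model(spec: dict, path: Path) -> None:
    """Write the model as plain JSON that any language or runtime can parse."""
    path.write_text(json.dumps(spec, indent=2))


def load_and_predict(path: Path, features: dict) -> float:
    """Load the exported file and score one example without any ML framework."""
    spec = json.loads(path.read_text())
    if spec["model_type"] != "logistic_regression":
        raise ValueError(f"unsupported model type: {spec['model_type']}")
    z = spec["intercept"] + sum(
        coef * features[name]
        for name, coef in zip(spec["feature_names"], spec["coefficients"])
    )
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid turns the score into a probability


with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.json"
    export_model(model, model_path)
    p = load_and_predict(model_path, {"age": 1.0, "income": 1.0})
    print(p)
```

Real interchange formats go much further, describing the full computation graph or model structure, data types, and preprocessing steps, which is why dedicated tools are normally used instead of a hand-written layout like this one.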
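Because the training and production environments usually differ, it can help to record the training environment alongside the model and compare it with the serving environment at deployment time. The sketch below does this with the standard library's `platform` and `importlib.metadata` modules. The fingerprint fields, the helper names, and the simulated version mismatch are assumptions for illustration, not a standard format.

```python
import platform
from importlib import metadata


def environment_fingerprint(packages: list[str]) -> dict:
    """Capture the interpreter version, OS, CPU architecture, and package versions."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # package is not installed in this environment
    return {
        "python": platform.python_version(),
        "system": platform.system(),
        "machine": platform.machine(),
        "packages": versions,
    }


def compare_environments(training: dict, production: dict) -> list[str]:
    """Return human-readable differences between two fingerprints."""
    diffs = []
    for key in ("python", "system", "machine"):
        if training[key] != production[key]:
            diffs.append(f"{key}: {training[key]} (training) vs {production[key]} (production)")
    for name, version in training["packages"].items():
        prod_version = production["packages"].get(name)
        if version != prod_version:
            diffs.append(f"{name}: {version} (training) vs {prod_version} (production)")
    return diffs


training_env = environment_fingerprint(["numpy", "scikit-learn"])
# In practice the training fingerprint would be saved with the model; here a copy with a
# hypothetical numpy version stands in for the production environment.
production_env = {
    **training_env,
    "packages": {**training_env["packages"], "numpy": "0.0.0-hypothetical"},
}
diffs = compare_environments(training_env, production_env)
for line in diffs:
    print(line)
```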

These are some of the many considerations to address before deploying the model. The actual processes and steps taken to deploy the model depend on how the model will be integrated into the production system. You will learn about integration in the next chapter of the tutorial.