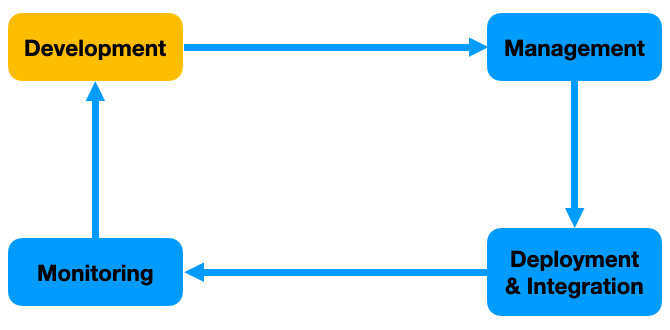

The life-cycle of a Machine Learning model starts with the development of the model. In this chapter of the MLOps tutorial, you will learn about the development phase of Machine Learning models.

The development phase itself includes several steps, such as:

- Feature Engineering

- Preprocessing the data

- Training and Testing models

- Model Selection

Feature Engineering

Once the data has been analyzed, it is important to choose the right features for training the model. In the field of Machine Learning, it is often said, “Garbage In, Garbage Out”. If irrelevant or uninformative features are used for training, the model fails to learn useful representations from the data and therefore performs poorly. The process of choosing appropriate features is called Feature Engineering, and it can be performed in two ways, or a combination of both:

Feature Selection– A subset of the features of the original data is selected. For example, for a dataset with the features X1, X2, X3, X4, only X1 and X4 are selected for training the model.

Feature Extraction– New features are created from the existing set of features. For example, for a dataset with the features X1, X2, the new features X1*X2 and X1/X2 are created for training the model. Dimension reduction algorithms such as Principal Component Analysis (PCA) are feature extraction algorithms.
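The two approaches above can be sketched in a few lines of plain Python. This is only a minimal illustration: the sample values and the helper names `select_features` and `extract_features` are assumptions made for this example, not part of any library.

```python
# Toy dataset: each row is one sample with four features (values are made up).
rows = [
    {"X1": 2.0, "X2": 4.0, "X3": 1.0, "X4": 10.0},
    {"X1": 3.0, "X2": 6.0, "X3": 0.0, "X4": 20.0},
]


def select_features(row, keep):
    """Feature selection: keep only a subset of the original features."""
    return {name: row[name] for name in keep}


def extract_features(row):
    """Feature extraction: derive new features from the existing ones."""
    return {
        "X1*X2": row["X1"] * row["X2"],
        # Guard against division by zero; None marks an undefined ratio.
        "X1/X2": row["X1"] / row["X2"] if row["X2"] != 0 else None,
    }


selected = [select_features(r, ["X1", "X4"]) for r in rows]
extracted = [extract_features(r) for r in rows]

print(selected)
print(extracted)
```

In practice the two are often combined, for example by extracting new features first and then selecting the most useful ones from the enlarged set.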

Note– Manual Feature Selection and Feature Extraction are often less necessary in Deep Learning, because deep networks learn useful representations directly from the raw features.

Preprocessing the Data

The next step is to pre-process the data. Pre-processing is done by building and using pipelines. The pre-processing steps include cleaning the data, imputing missing values, One-Hot Encoding categorical variables, scaling continuous-valued features to a certain range, etc. Separate pipelines are created for the training, validation, and test datasets, because different transformations need to be applied to different datasets. For example, data augmentation and shuffling are applied to the training dataset but not to the validation and test datasets.
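As a rough sketch of such pipelines, the plain-Python example below imputes a missing value, min-max scales a continuous feature, and one-hot encodes a categorical one. The column names (`age`, `city`), the data, and all function names are hypothetical. One assumption goes beyond the text above: the imputation, scaling, and encoding parameters are learned from the training split only and then reused on the other splits, which is a common way to keep information from the validation and test data out of training.

```python
import random
from statistics import mean


def fit_preprocessor(train_rows):
    """Learn imputation, scaling, and encoding parameters from training data only."""
    ages = [r["age"] for r in train_rows if r["age"] is not None]
    return {
        "age_mean": mean(ages),
        "age_min": min(ages),
        "age_max": max(ages),
        "cities": sorted({r["city"] for r in train_rows}),
    }


def transform(row, params):
    """Apply the learned parameters to a single row from any split."""
    # Impute a missing age with the training mean.
    age = row["age"] if row["age"] is not None else params["age_mean"]
    # Min-max scale using the range seen in the training data.
    span = params["age_max"] - params["age_min"]
    scaled = (age - params["age_min"]) / span if span else 0.0
    # One-hot encode; a category unseen in training becomes all zeros.
    one_hot = [1 if row["city"] == c else 0 for c in params["cities"]]
    return [scaled] + one_hot


def training_pipeline(rows, params, seed=0):
    """Training split: transform, then shuffle."""
    features = [transform(r, params) for r in rows]
    random.Random(seed).shuffle(features)
    return features


def evaluation_pipeline(rows, params):
    """Validation and test splits: transform only, original order kept."""
    return [transform(r, params) for r in rows]


train = [
    {"age": 20, "city": "Paris"},
    {"age": None, "city": "Delhi"},
    {"age": 40, "city": "Paris"},
]
validation = [{"age": 30, "city": "Tokyo"}]

params = fit_preprocessor(train)
train_features = training_pipeline(train, params)
validation_features = evaluation_pipeline(validation, params)
```

Data augmentation would be added to `training_pipeline` in the same way as the shuffle, leaving `evaluation_pipeline` untouched.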

Training and Testing Models

State-of-the-art algorithms are used for training the models. Each algorithm is tried with various hyper-parameter values to find the best-performing combination. During the training phase, the performance of the model is measured on the validation dataset, and the final hyper-parameters are chosen based on how the various models perform on it. A separate test dataset is then used to estimate how the model will perform in the real world. Unlike with the validation dataset, no hyper-parameter changes are made based on the model's performance on the test dataset. In special cases, such as when data is scarce, techniques such as k-fold cross-validation are used.
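The workflow above can be illustrated with a deliberately tiny example. The sketch below assumes a one-dimensional k-nearest-neighbours classifier and made-up data points, chosen only because they fit in a few lines. The hyper-parameter `k` is picked on the validation set, and the test set is used once at the end. The helper `k_fold_splits` is a hypothetical function showing how the index splits for k-fold cross-validation could be generated.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Predict the majority label among the k training points nearest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, data, k):
    """Fraction of points in `data` that the k-NN model labels correctly."""
    correct = sum(knn_predict(train, x, k) == y for x, y in data)
    return correct / len(data)


def k_fold_splits(n_samples, n_folds):
    """Yield (train_indices, validation_indices) for each fold."""
    indices = list(range(n_samples))
    fold_size, remainder = divmod(n_samples, n_folds)
    start = 0
    for fold in range(n_folds):
        # The first `remainder` folds get one extra sample.
        stop = start + fold_size + (1 if fold < remainder else 0)
        yield indices[:start] + indices[stop:], indices[start:stop]
        start = stop


# Made-up (feature, label) pairs.
train = [(1.0, 0), (2.0, 0), (3.0, 0), (3.6, 1), (6.0, 1), (7.0, 1), (8.0, 1)]
validation = [(2.5, 0), (3.4, 0), (6.5, 1), (5.0, 1)]
test = [(1.5, 0), (7.5, 1)]

# Hyper-parameter search: score each candidate k on the validation set.
scores = {k: accuracy(train, validation, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)

# The test set is touched only once, after the hyper-parameter is fixed.
test_accuracy = accuracy(train, test, best_k)

folds = list(k_fold_splits(n_samples=5, n_folds=2))
```

With k-fold cross-validation, the validation score for each hyper-parameter value would be averaged over all folds instead of being computed on a single fixed validation set.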

Model Selection

Once various models are trained, their performance on the test dataset is compared using metrics such as Accuracy, Error Rate, Precision, Recall, F1 Score, and AUC for classification problems, and metrics such as Mean Squared Error, Mean Absolute Error, and Huber loss for regression problems. While performance is an important factor, the best-performing model isn't always chosen for actual use in production. A host of other factors affect the decision, such as latency (the time required to make each prediction) and the hardware the model runs on (edge devices, cloud, etc.). Once all these factors have been considered, the model to be productionized is finalized.
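To make the metrics and the trade-off concrete, here is a small plain-Python sketch. The labels, predictions, candidate models, scores, and latency figures are all invented for illustration, and `select_model` is a hypothetical helper showing one possible rule: pick the best-scoring model among those that meet a latency budget.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def regression_metrics(y_true, y_pred):
    """Mean Squared Error and Mean Absolute Error."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    return {
        "mse": sum(e * e for e in errors) / len(errors),
        "mae": sum(abs(e) for e in errors) / len(errors),
    }


def select_model(candidates, max_latency_ms):
    """Return the highest-F1 candidate within the latency budget, or None."""
    eligible = [c for c in candidates if c["latency_ms"] <= max_latency_ms]
    return max(eligible, key=lambda c: c["f1"]) if eligible else None


clf = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
reg = regression_metrics([3.0, 5.0, 2.0], [2.0, 5.0, 4.0])

# Hypothetical candidates: scores and latencies are invented numbers.
candidates = [
    {"name": "large_model", "f1": 0.93, "latency_ms": 180.0},
    {"name": "medium_model", "f1": 0.91, "latency_ms": 40.0},
    {"name": "small_model", "f1": 0.85, "latency_ms": 8.0},
]
chosen = select_model(candidates, max_latency_ms=50.0)
```

Here the model with the highest F1 score exceeds the 50 ms budget, so the second-best model is chosen instead, which mirrors the point that the best-performing model is not always the one that goes to production.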