In this chapter of the PyTorch tutorial, you will learn what broadcasting is and how PyTorch broadcasts tensors.

PyTorch borrows its broadcasting behavior from NumPy. Broadcasting allows arithmetic operations on tensors that are not of the same size. PyTorch automatically broadcasts the ‘smaller’ tensor to the size of the ‘larger’ tensor, but only if certain constraints are met.

Example

This example demonstrates the addition operation between tensors that are not of the same size.

import torch

tensor = torch.tensor([1, 2, 3, 4]) + torch.tensor([1, 2, 3])

# Outputs- RuntimeError: The size of tensor a (4) must match the size of tensor b (3) at non-singleton dimension 0

The addition operation is not possible in this case because the sizes 4 and 3 differ and neither of them is 1.

Example

This example also demonstrates an addition operation between tensors that are not of the same size.

tensor = torch.tensor([1, 2, 3]) + torch.tensor([1])

print(tensor)

# Outputs- tensor([2, 3, 4])

Note– The value 1 is added to all the elements in the first tensor.

Unlike the previous example, the addition operation succeeds here. This is because the smaller tensor was broadcast to the size of the larger tensor.

How does Broadcasting happen in PyTorch?

Consider the addition of the two tensors in the above example:

[1, 2, 3] + [1]

Since the two tensors are not of the same size, PyTorch tries to broadcast the smaller one to the size of the larger one. Here it can do so by repeating the single value 3 times, so the addition is equivalent to the following:

[1, 2, 3] + [1, 1, 1]

This ‘stretching’ of the smaller tensor across one or many dimensions is what is known as ‘broadcasting’ of the tensor.

Note– PyTorch does not actually ‘stretch’ the smaller tensor to the size of the larger one in memory. No copy of the data is made; the same values are simply reused while the operation loops over the elements, which creates the illusion of stretching/resizing. The analogy of stretching is only an aid to understanding the topic.
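One way to observe this is with `expand`, which returns a broadcast view of a tensor. The sketch below is only an illustration and does not claim to show what PyTorch does internally during an addition; the variable names are chosen for this example. The expanded view reports a stride of 0, meaning all three positions read the same stored value.

```python
import torch

small = torch.tensor([1])
stretched = small.expand(3)  # a view of shape [3]; the data is not copied

print(stretched)
# Outputs- tensor([1, 1, 1])

print(stretched.stride())
# Outputs- (0,)

# Both tensors point at the same memory location.
print(stretched.data_ptr() == small.data_ptr())
# Outputs- True

result = torch.tensor([1, 2, 3]) + stretched
print(result)
# Outputs- tensor([2, 3, 4])
```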

Rules for Broadcasting

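Two tensors are compatible for broadcasting if, when their shapes are compared dimension by dimension starting from the trailing (rightmost) dimension, every pair of dimensions satisfies one of the following:

- The sizes of the two dimensions are the same.

- The size of one of the two dimensions is 1.

- The dimension does not exist in one of the tensors (it is then treated as if its size were 1).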
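The rules above can be sketched as a small pure-Python function. `broadcast_shape` is a hypothetical helper written for this illustration, not part of PyTorch; it takes two shapes as tuples and returns the shape that results from broadcasting them, or raises an error if they are incompatible.

```python
def broadcast_shape(shape_a, shape_b):
    """Return the broadcast shape of two shapes, or raise ValueError."""
    result = []
    # Walk both shapes from the trailing dimension towards the front.
    for i in range(1, max(len(shape_a), len(shape_b)) + 1):
        # A dimension that does not exist is treated as size 1.
        dim_a = shape_a[-i] if i <= len(shape_a) else 1
        dim_b = shape_b[-i] if i <= len(shape_b) else 1
        if dim_a == dim_b or dim_b == 1:
            result.append(dim_a)
        elif dim_a == 1:
            result.append(dim_b)
        else:
            raise ValueError(f"sizes {dim_a} and {dim_b} are not compatible")
    return tuple(reversed(result))


print(broadcast_shape((2, 2), (1, 2)))
# Outputs- (2, 2)

print(broadcast_shape((1, 2), (2, 1)))
# Outputs- (2, 2)

print(broadcast_shape((2, 2), (1,)))
# Outputs- (2, 2)
```

Calling `broadcast_shape((4,), (3,))` raises a `ValueError`, which mirrors the failed addition in the first example of this chapter.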

The following example demonstrates the addition of tensors involving broadcasting.

Example

tensor1 = torch.tensor([[1, 2], [0, 3]])

tensor2 = torch.tensor([[3, 1]])

tensor3 = torch.tensor([[5], [2]])

tensor4 = torch.tensor([7])

print(tensor1.shape)

# Outputs- torch.Size([2, 2])

print(tensor2.shape)

# Outputs- torch.Size([1, 2])

print(tensor3.shape)

# Outputs- torch.Size([2, 1])

print(tensor4.shape)

# Outputs- torch.Size([1])

print(tensor1 + tensor2)

# Outputs- tensor([[4, 3], [3, 4]])

print(tensor1 + tensor3)

# Outputs- tensor([[6, 7], [2, 5]])

print(tensor2 + tensor3)

# Outputs- tensor([[8, 6], [5, 3]])

print(tensor1 + tensor4)

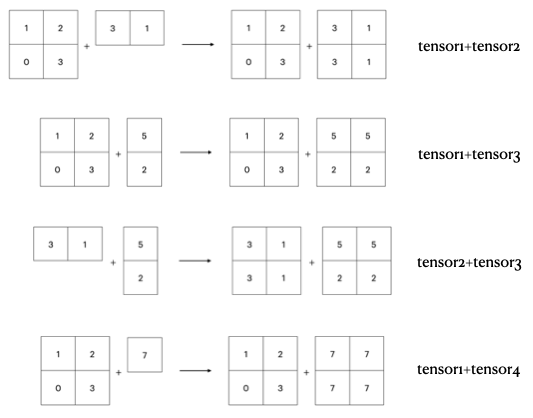

# Outputs- tensor([[ 8, 9], [ 7, 10]])The figure below depicts how tensors appear to ‘stretch’ or ‘resize’ during broadcasting. Notice how the smaller tensor appears to ‘stretch’ to the size of the larger tensor. The smaller tensor appears to repeat to match the size of the larger tensor.

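The third example (tensor2 + tensor3) shows how broadcasting works for tensors that have the same number of elements but different shapes. Here both tensors are broadcast, each along a different dimension, to the common shape [2, 2]. Notice the pattern in which the tensors appear to repeat themselves so that they have the same shape and size after broadcasting.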
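To inspect the ‘stretched’ tensors directly, `torch.broadcast_tensors` can be used to return broadcast views of its arguments. The following is a minimal sketch for tensor2 and tensor3 from the example; the names `stretched2` and `stretched3` are chosen for this illustration.

```python
import torch

tensor2 = torch.tensor([[3, 1]])    # shape [1, 2]
tensor3 = torch.tensor([[5], [2]])  # shape [2, 1]

# Both results have the common shape [2, 2].
stretched2, stretched3 = torch.broadcast_tensors(tensor2, tensor3)

print(stretched2)
# Outputs- tensor([[3, 1], [3, 1]])

print(stretched3)
# Outputs- tensor([[5, 5], [2, 2]])

print(stretched2 + stretched3)
# Outputs- tensor([[8, 6], [5, 3]])
```

The row of tensor2 is repeated downwards and the column of tensor3 is repeated sideways, which gives the same result as tensor2 + tensor3.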

Note– In this chapter, broadcasting has been demonstrated using only the addition operation. However, broadcasting applies in the same way to other arithmetic operations on tensors, such as subtraction, multiplication, and division.
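As a brief illustration, the sketch below reuses the tensors from the earlier example with subtraction and multiplication. A comparison is also included, on the assumption that it is useful to see another element-wise operation following the same rules; the variable names `difference`, `product`, and `greater` are chosen for this example.

```python
import torch

tensor1 = torch.tensor([[1, 2], [0, 3]])  # shape [2, 2]
tensor2 = torch.tensor([[3, 1]])          # shape [1, 2]
tensor3 = torch.tensor([[5], [2]])        # shape [2, 1]

difference = tensor1 - tensor2
print(difference)
# Outputs- tensor([[-2, 1], [-3, 2]])

product = tensor1 * tensor3
print(product)
# Outputs- tensor([[ 5, 10], [ 0, 6]])

greater = tensor1 > tensor2
print(greater)
# Outputs- tensor([[False, True], [False, True]])
```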