The Rectified Linear Unit (ReLU) is an activation function used in neural networks. An activation function determines the output of a neuron from its input: it is applied to the weighted sum of the neuron's inputs plus the bias term.

It is widely used in neural networks because it is fast and simple to compute, and it generally performs better than traditional activation functions such as the sigmoid and hyperbolic tangent (tanh) functions.

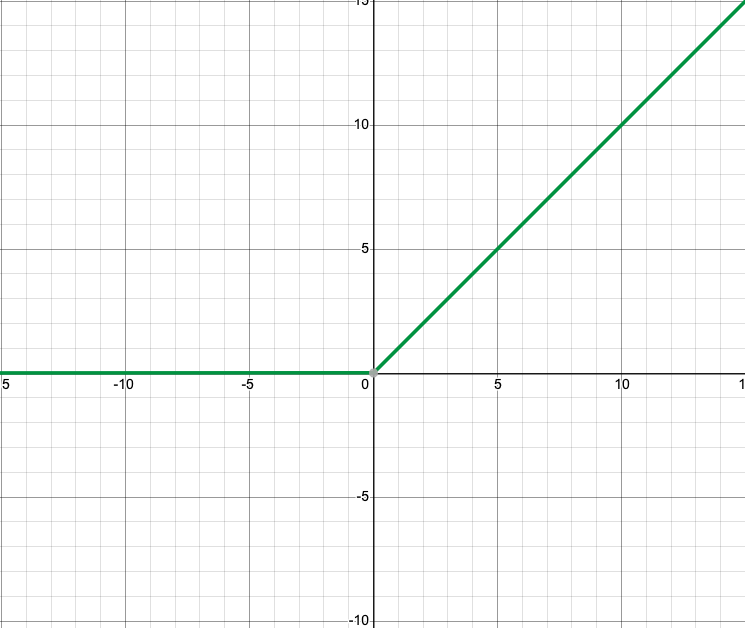

ReLU outputs the larger of 0 and x. So when x is negative, the output is 0, and when x is positive, the output is x. Mathematically, it can be written as:

y=ReLU(x)=max(0,x)

The derivative of this function is 0 for all values of x less than 0 and 1 for all values of x greater than 0. At 0 however, the derivative of this function does not exist.
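
The definition and its derivative can be sketched in a few lines of plain Python. This is a minimal illustration, not a library implementation: the function names `relu` and `relu_grad` are chosen here for the example, and returning 0 for the derivative at x = 0 is an assumed convention, since the true derivative is undefined at that point.

```python
def relu(x: float) -> float:
    """Return the larger of 0 and x."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, 0 for x < 0.

    At x == 0 the derivative does not exist; this sketch
    returns 0.0 there as an assumed convention.
    """
    return 1.0 if x > 0 else 0.0


# Evaluate both functions on a negative, a zero, and a positive input.
for x in (-2.0, 0.0, 3.0):
    print(x, relu(x), relu_grad(x))
```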

Fig. 1: ReLU activation function

Pros

- Often performs better than traditional activation functions such as the sigmoid and tanh functions.

- Is fast and easy to calculate. The same applies to its derivative, which is used during backpropagation.

- It does not saturate for positive inputs: its gradient there stays at 1, which helps mitigate the vanishing gradient problem during gradient descent. It does not by itself prevent exploding gradients, since its output is unbounded for positive inputs.
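
To make the saturation point concrete, the sketch below compares the local gradient of the sigmoid with that of ReLU at increasingly large positive inputs. The helper names are chosen for this example, and the test inputs are arbitrary; it only looks at a single activation and ignores the effect of weights.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x: float) -> float:
    """Derivative of ReLU (0 at x == 0 by assumed convention)."""
    return 1.0 if x > 0 else 0.0


# The sigmoid gradient shrinks toward 0 as x grows (saturation),
# while the ReLU gradient stays at 1 for every positive input.
for x in (0.5, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid'={sigmoid_grad(x):.6f}  relu'={relu_grad(x):.1f}")
```

The sigmoid gradient is at most 0.25 (at x = 0) and decays quickly away from it, so multiplying many such factors during backpropagation tends to shrink the signal.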

Cons

- Although the function is continuous everywhere, it is not differentiable at x=0, i.e., the slope of the graph changes abruptly there, as can be seen in Fig. 1. This kink can make gradient descent ‘bounce around’ near that point. Despite this, ReLU works very well in practice.

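- It suffers from the dying ReLU problem: a neuron whose weighted sum is negative for every training input always outputs 0, receives a zero gradient, and stops learning. Variants of ReLU such as Leaky ReLU address this by using a small non-zero slope for negative inputs.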
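
The dying ReLU problem and the Leaky ReLU remedy can be illustrated with a single hypothetical neuron. In this sketch the weight, bias, and inputs are made-up values chosen so that the weighted sum is negative for every input, and the slope `alpha=0.01` is an assumed (though commonly used) default rather than anything prescribed above.

```python
def relu_grad(z: float) -> float:
    """Derivative of ReLU (0 at z == 0 by assumed convention)."""
    return 1.0 if z > 0 else 0.0


def leaky_relu(z: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: z for positive z, alpha * z otherwise."""
    return z if z > 0 else alpha * z


def leaky_relu_grad(z: float, alpha: float = 0.01) -> float:
    """Derivative of Leaky ReLU: 1 for positive z, alpha otherwise."""
    return 1.0 if z > 0 else alpha


# Hypothetical neuron whose bias is so negative that the
# weighted sum is below zero for every input it sees.
weight, bias = 1.0, -10.0
inputs = [0.2, 1.5, 3.0]
pre_activations = [weight * x + bias for x in inputs]

# With ReLU every gradient is 0, so the neuron cannot recover.
relu_grads = [relu_grad(z) for z in pre_activations]
# With Leaky ReLU a small gradient still flows through.
leaky_grads = [leaky_relu_grad(z) for z in pre_activations]

print(relu_grads)
print(leaky_grads)
```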

ReLU is often the default activation function for hidden units in dense ANNs (Artificial Neural Networks) and CNNs (Convolutional Neural Networks). Also, when ReLU is used as the activation function, He initialization is usually preferred.
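
As a rough sketch of what He initialization does, the normal variant draws each weight from a zero-mean Gaussian with standard deviation sqrt(2 / fan_in), where fan_in is the number of inputs to the layer. The function name `he_normal`, the layer sizes, and the seed below are illustrative assumptions; deep learning frameworks ship their own initializers, which should be preferred in real code.

```python
import math
import random


def he_normal(fan_in: int, fan_out: int, seed: int = 0) -> list[list[float]]:
    """Return a fan_in x fan_out weight matrix drawn from N(0, 2 / fan_in)."""
    rng = random.Random(seed)          # local generator for reproducibility
    std = math.sqrt(2.0 / fan_in)      # He (normal) standard deviation
    return [[rng.gauss(0.0, std) for _ in range(fan_out)]
            for _ in range(fan_in)]


# Hypothetical layer with 256 inputs and 128 ReLU units.
weights = he_normal(fan_in=256, fan_out=128)

# Check that the empirical standard deviation is near the target.
flat = [w for row in weights for w in row]
mean = sum(flat) / len(flat)
sample_std = math.sqrt(sum((w - mean) ** 2 for w in flat) / len(flat))
print(f"target std={math.sqrt(2.0 / 256):.4f}  sample std={sample_std:.4f}")
```

The factor of 2 compensates for ReLU zeroing out roughly half of its inputs, which helps keep the variance of activations stable from layer to layer.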