In the last post we built a Linear Regression model with NumPy by solving the Normal Equation. In this post we will train a Linear Regression model by using TensorFlow to solve the same Normal Equation. TensorFlow is an open-source library from Google that can be used for a variety of tasks, including Machine Learning and Deep Learning, and it is a very popular choice for training such models. If you have been involved in Machine Learning in any form, you have most likely heard of TensorFlow. For the sake of simplicity, we will train the model on an artificially created dataset with just a single feature.

We will start with a brief summary of the Normal Equation. I explained it in the previous post, but I will go over it again here. Next we will move on to implementing the Linear Regression model in TensorFlow, with the code and an explanation of what it does. This part is divided into three steps. The first step creates the dataset. The second step trains the model by calculating the value of the θ vector. The third step makes predictions over the test set. This post is deliberately structured like the last one so that you can compare the NumPy and TensorFlow versions, and the dataset is similar to the one used there.

The link to the Jupyter Notebook will also be made available at the end of the post.

Normal Equation

The weights of a linear regression model can be calculated directly by using the Normal Equation. Mathematically, the Normal Equation can be written as:

$$\theta = (X^\top X)^{-1} X^\top y$$

The value of θ computed from this equation minimises the sum of squared errors over the entire training dataset (and therefore the mean squared error as well), which makes it the optimal value of θ for that dataset, provided that X⊺X is invertible.
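For readers who want to see where the equation comes from, here is a short derivation sketch. It assumes m training instances, a design matrix X that already includes the column of 1's, and an invertible X⊺X. The symbol m and the name MSE(θ) are introduced here for illustration only.

$$
\begin{aligned}
\mathrm{MSE}(\theta) &= \frac{1}{m}\,\lVert X\theta - y \rVert^{2} \\
\nabla_{\theta}\,\mathrm{MSE}(\theta) &= \frac{2}{m}\,X^\top (X\theta - y) = 0 \\
X^\top X\,\theta &= X^\top y \\
\theta &= (X^\top X)^{-1} X^\top y
\end{aligned}
$$

Setting the gradient to zero gives a linear system in θ, and solving that system yields the Normal Equation.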

Code

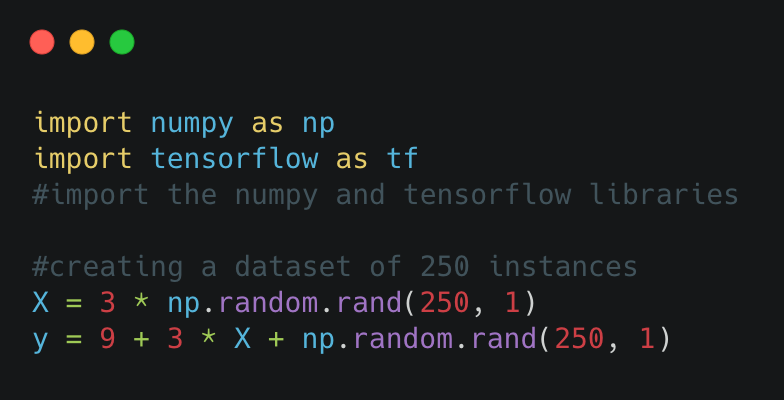

Creating an Artificial Dataset

We create an artificial dataset with only one feature. The dataset can be thought of as points sampled from the line y = 9 + 3x with some random Gaussian noise added. We store the feature values in the variable X and their corresponding target values in the variable y.
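A dataset like the one described above could be generated roughly as follows. This is only a sketch: the number of instances (100), the feature range [0, 2), the unit noise scale, and the seed are assumptions made for illustration, and they may differ from the values used in the notebook.

```python
import numpy as np

# Assumed values for illustration; the post does not state them.
m = 100                            # number of training instances
rng = np.random.default_rng(42)    # fixed seed so the sketch is repeatable

# One feature, drawn uniformly from [0, 2).
X = 2 * rng.random((m, 1))

# Targets from the line y = 9 + 3x plus standard Gaussian noise.
y = 9 + 3 * X + rng.standard_normal((m, 1))

print(X.shape, y.shape)
```

Both X and y are kept as column vectors so that they can be used directly in the matrix products of the Normal Equation.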

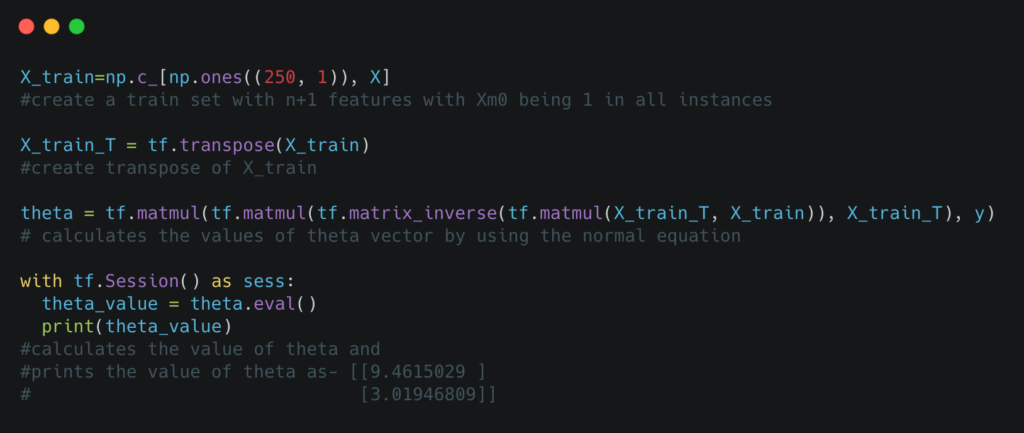

Finding the value of θ Vector

Next we build the training matrix by adding a column of all 1’s to the original training data. This extra column lets the first entry of θ act as the intercept term. We also create a variable X_train_T to hold the transpose of X_train, purely to keep the expression for θ readable. We then define theta by using the Normal Equation. Finally, we start a TensorFlow session to evaluate theta, the value that minimises the mean squared error, and print it. If you do not come from a TensorFlow background, this last step may not be entirely clear. For now, it is enough to know that a session is what actually runs a computation, whether that is the value of the θ vector or anything else.
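The step described above might look roughly like the sketch below. Sessions belong to the TensorFlow 1.x graph-mode API, so the sketch uses `tf.compat.v1.Session` with an explicit `tf.Graph`, which should also run under TensorFlow 2.x. The dataset values (100 instances, seed 42) and the names `X_b`, `y_train`, and `theta_value` are assumptions for illustration, not necessarily what the notebook uses.

```python
import numpy as np
import tensorflow as tf

# Assumed dataset, generated as described in the post (values are illustrative).
m = 100
rng = np.random.default_rng(42)
X = 2 * rng.random((m, 1))
y = 9 + 3 * X + rng.standard_normal((m, 1))

# Add a column of 1's so that theta[0] acts as the intercept.
X_b = np.c_[np.ones((m, 1)), X]

# Build the computation graph for the Normal Equation.
graph = tf.Graph()
with graph.as_default():
    X_train = tf.constant(X_b, dtype=tf.float32, name="X_train")
    y_train = tf.constant(y, dtype=tf.float32, name="y_train")
    X_train_T = tf.transpose(X_train)
    theta = tf.matmul(
        tf.matmul(tf.linalg.inv(tf.matmul(X_train_T, X_train)), X_train_T),
        y_train,
    )

# Nothing is computed until the graph is run inside a session.
with tf.compat.v1.Session(graph=graph) as sess:
    theta_value = sess.run(theta)

print(theta_value)
```

Because of the noise, the printed values should land near 9 (intercept) and 3 (slope) rather than exactly on them.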

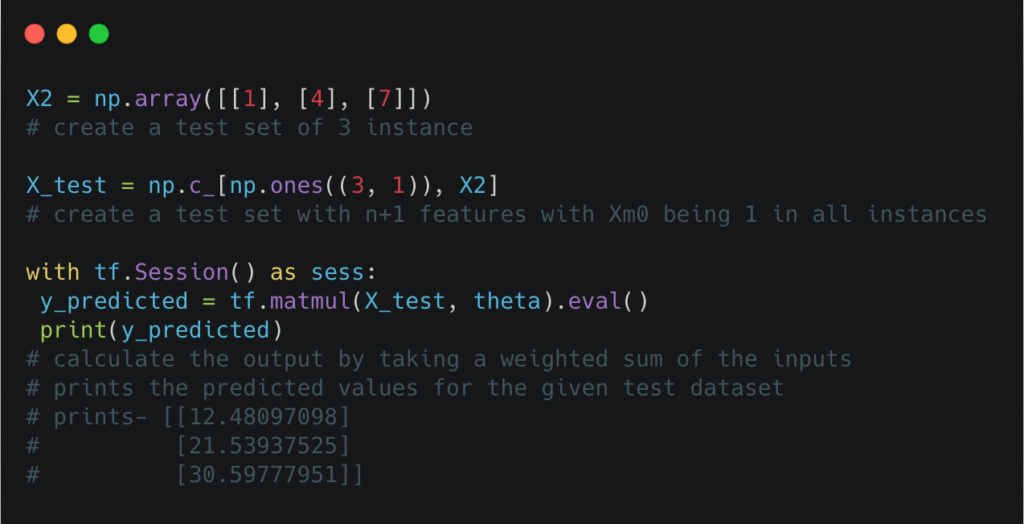

Predicting Output over Test Set

Now that we have calculated the value of θ that minimises the Mean Squared Error (MSE), we can predict the values for new instances by computing the weighted sum of their features. We create a new test set with 3 instances: 1, 4, and 7. As with the training set, we add a column containing all 1’s to the original data. Next, we predict the values for the test instances, store them in the variable y_predicted, and print them. As in the previous step, we calculate the output after starting a TensorFlow session.
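The prediction step can be sketched as below. To keep the example self-contained, `theta_value` is a stand-in set to the noise-free line parameters (9 and 3). This is an assumption for illustration, not a fitted result, and in practice you would use the θ evaluated in the previous step. The names `X_new`, `X_new_b`, and `y_predicted_value` are also hypothetical.

```python
import numpy as np
import tensorflow as tf

# Stand-in for the fitted theta (assumption: the noise-free parameters 9 and 3).
theta_value = np.array([[9.0], [3.0]], dtype=np.float32)

# Test set with 3 instances, plus the column of 1's for the intercept.
X_new = np.array([[1.0], [4.0], [7.0]])
X_new_b = np.c_[np.ones((3, 1)), X_new]

# Prediction is the weighted sum of the features: X_test @ theta.
graph = tf.Graph()
with graph.as_default():
    X_test = tf.constant(X_new_b, dtype=tf.float32, name="X_test")
    theta = tf.constant(theta_value, name="theta")
    y_predicted = tf.matmul(X_test, theta)

with tf.compat.v1.Session(graph=graph) as sess:
    y_predicted_value = sess.run(y_predicted)

print(y_predicted_value)
```

With the stand-in θ, the predictions for 1, 4, and 7 are 12, 21, and 30. A θ fitted on noisy data would give values close to these.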

Conclusion

In this blog post on training a linear regression model with TensorFlow, we first created an artificial dataset with a single feature for the sake of simplicity. Then, using the Normal Equation, we calculated the value of the weight vector θ. Next we created an artificial test set and calculated the model's predictions as a weighted sum of the inputs. In short, we created a Linear Regression model using TensorFlow. This post is intentionally similar to the last one so that you can compare the TensorFlow and NumPy implementations of the Normal Equation. I hope you can now train your own linear regression model in TensorFlow.

You can find the code for this blog on GitHub here.