Interest in Self-Supervised Learning (SSL) has risen significantly in recent years, mainly because of its potential to advance Machine Learning. Since its inception, Supervised Learning has suffered from a shortage of annotated data and from the high cost of annotating new data. It is exactly this drawback that Self-Supervised Learning aims to address. As the name suggests, Self-Supervised Learning generates labels from the data itself through an automated process, without human annotation. Pretext Training is a task that a Machine Learning model is trained on before its actual training. In this blog post, we will talk about what exactly Pretext Training is and how it helps Self-Supervised Learning.

Pretext Training refers to training a model on a task other than the one it will ultimately be trained and used for. It is done before the actual training of the model, which is why Pretext Training is sometimes also known as Pre-Training.

Now, this might seem counter-intuitive at first. Why would you even want to train your model on something that it will never be used for?

Supervision is the opium of the AI researcher.

Jitendra Malik

As it turns out, training the model on a task that is related to the actual task can help it learn meaningful representations and features of the data. Let’s consider an example.

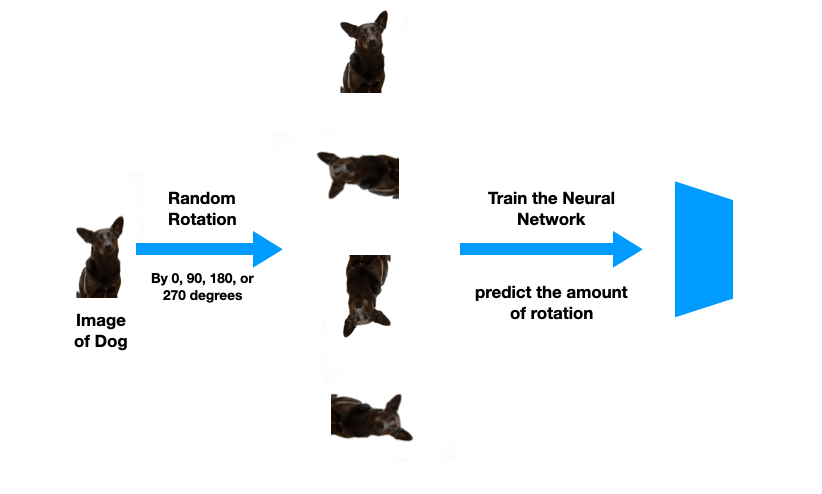

Say you want to train a Neural Network to identify the breed of a dog from an image of it. A suitable pretext task is to collect various images of dogs, rotate each one randomly by 0, 90, 180, or 270 degrees, and have a Neural Network predict the amount of rotation applied.
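The label generation for this pretext task can be sketched in a few lines of NumPy. This is only an illustration: the function name `make_rotation_batch`, the random arrays standing in for dog photos, and the 32x32 image size are assumptions made for the example. The point to notice is that the label `k` comes from the rotation we applied ourselves, so no human annotation is needed.

```python
import numpy as np

def make_rotation_batch(images, rng):
    """Rotate each image by a random multiple of 90 degrees.

    Returns the rotated images and the rotation classes 0, 1, 2, 3,
    which stand for 0, 90, 180, and 270 degrees (counterclockwise).
    """
    rotated, labels = [], []
    for image in images:
        k = int(rng.integers(0, 4))           # the label is generated, not annotated
        rotated.append(np.rot90(image, k=k))  # rotates the first two axes (height, width)
        labels.append(k)
    return rotated, np.array(labels)

rng = np.random.default_rng(0)
# Stand-in for unlabelled dog photos: 8 random square RGB arrays (an assumption).
images = [rng.random((32, 32, 3)) for _ in range(8)]
rotated, labels = make_rotation_batch(images, rng)
```

Each pair `(rotated[i], labels[i])` can then be used as an ordinary supervised training example for a four-class classifier.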

We do not actually want to use the Neural Network to predict the rotation of dog images. However, training it to do so helps the Neural Network learn meaningful representations and features from a dog’s image. For example, it will learn to recognize where the eyes, ears, and nose appear and consequently deduce the amount of rotation from their orientation.

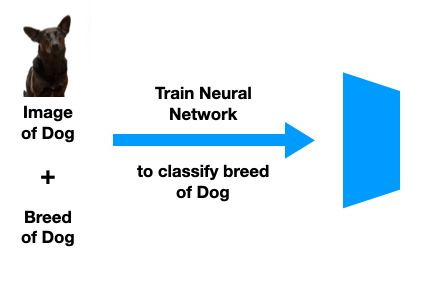

Once the Neural Network is done with pretext training, we take it and train (or fine-tune) it to classify the breed of a dog.

After learning these representations, the Neural Network will find it easier to learn to classify the breed of a dog from an image, because it already ‘knows’ what dogs look like. Had we trained it from scratch, it would have started out knowing nothing about dogs.

The biggest advantage of pretext training is that it helps you train a Neural Network with a limited amount of labelled data. Notice that you do not need a label (the dog’s breed) for a dog’s image to train the network on the pretext task, because the rotation label is generated automatically. You only need labelled data for the actual training. Therefore, the amount of labelled data needed to train the model is much smaller. And since there is no shortage of unlabelled data on the internet, it is very beneficial to perform pretext training prior to the actual training of the model.
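The two stages described above (pretext training on plenty of unlabelled data, then fine-tuning on a small labelled set) can be sketched with a tiny NumPy network. Everything here is a toy assumption made for illustration: the `TinyNet` class, the synthetic 8x8 "images" with a brightness gradient, the three hypothetical breeds, and the random placeholder breed labels. A real setup would use a convolutional network and real photos. The sketch only shows the mechanics: the encoder weights learned on the rotation task are kept, and only the output layer is replaced for the new task.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

class TinyNet:
    """One hidden layer: a reusable encoder plus a task-specific head."""

    def __init__(self, n_inputs, n_hidden, n_classes):
        self.encoder = rng.normal(0.0, 0.1, (n_inputs, n_hidden))
        self.head = rng.normal(0.0, 0.1, (n_hidden, n_classes))

    def replace_head(self, n_classes):
        # Keep the encoder learned on the pretext task; start a fresh output layer.
        self.head = rng.normal(0.0, 0.1, (self.encoder.shape[1], n_classes))

    def train_step(self, x, y, lr=0.05, train_encoder=True):
        """One gradient-descent step on softmax cross-entropy; returns the loss."""
        pre = x @ self.encoder
        hidden = np.maximum(pre, 0.0)              # ReLU features
        probs = softmax(hidden @ self.head)
        n = len(y)
        loss = -np.log(probs[np.arange(n), y]).mean()
        grad_logits = probs.copy()
        grad_logits[np.arange(n), y] -= 1.0
        grad_logits /= n
        grad_hidden = grad_logits @ self.head.T    # computed before the head is updated
        self.head -= lr * (hidden.T @ grad_logits)
        if train_encoder:
            self.encoder -= lr * (x.T @ (grad_hidden * (pre > 0)))
        return loss

def rotation_dataset(n, size=8):
    """Synthetic images, brighter towards the top, rotated by k * 90 degrees."""
    upright = np.linspace(1.0, 0.0, size)[:, None] * np.ones((size, size))
    ks = rng.integers(0, 4, size=n)
    images = [np.rot90(upright + 0.1 * rng.normal(size=(size, size)), k) for k in ks]
    return np.stack(images).reshape(n, -1), ks

# Stage 1 (pretext / upstream): many unlabelled images, labels come from the rotation.
net = TinyNet(n_inputs=64, n_hidden=16, n_classes=4)
x_unlabelled, rotation = rotation_dataset(512)
for _ in range(100):
    pretext_loss = net.train_step(x_unlabelled, rotation)

# Stage 2 (actual / downstream): few labelled images, new head, same encoder.
net.replace_head(n_classes=3)                 # three hypothetical breeds
x_labelled, _ = rotation_dataset(24)          # stand-in for 24 labelled photos
breed = rng.integers(0, 3, size=24)           # placeholder labels, not real data
for _ in range(50):
    finetune_loss = net.train_step(x_labelled, breed)
```

Passing `train_encoder=False` to `train_step` freezes the pretrained encoder and trains only the new head, which is a common choice when the labelled set is very small.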

Because of the order in which these two tasks are performed, the pretext training and the actual training are sometimes colloquially known as the upstream task and the downstream task respectively. You can also have several pretext tasks prior to the actual training. These tasks usually go in order from the easiest to the most difficult.

Although in this blog post we have only talked about image rotation as a pretext task, there are several other ways to perform pretext training that allow you to train your model with a limited amount of labelled data. I will be talking more about these in a future blog post.
