Sigmoid function also known as logistic function is one of the activation functions used in the neural network. An activation function is the one which decides the output of the neuron in a neural network based on the input. The activation function is applied to the weighted sum of all the inputs and the bias term. The traditional step or sign function used for training a perceptron cannot be used to train a neural network as it is not continuous and does not offer any gradient to work with- a basic requirement for training the neural networks through backpropagation algorithm. If a linear activation function is used, then multiple layers of such a neural network will be able to represent only a linear function irrespective of how many layers are present in the neural network. To represent a complex highly non-linear function which is desirable for accomplishing complex tasks we need to have a non-linear activation function which is continuous and differentiable.

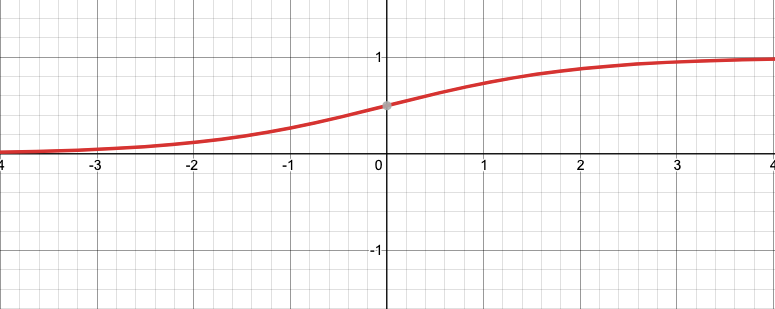

Sigmoid activation function is continuous and differentiable at all points and always produces an output between the number 0 and 1. It has a S- shaped graph as shown in the figure below. It is defined as-

y=1/(1+e^(-x))

The derivative of the sigmoid function is-

y’=y(1-y)

It is very easy to calculate the derivative of the function from the value of the function during the reverse pass of the backpropagation algorithm. The biological neurons in the brain have a roughly sigmoid shaped activation function causing many people to believe that using sigmoid activation functions must be the best way to train a neural network. For this reason they had been used extensively to train neural networks until the supremacy of non-saturating activation functions was discovered in practical cases.

Pros

- It is continuous and has a well defined gradient at all points.

- It is a non-linear activation function.

- Since the output is always between 0 and 1, it can be used as the activation function for the output neuron where probability is to be predicted.

- The derivative is easy to calculate which is required during the reverse pass of the backpropagation algorithm.

Cons

- It can cause the neural network to get stuck during training time.

- It can lead to the problem of exploding gradient or vanishing gradient. Due to this reason non-saturating activation function perform better in the practical case.