Backpropagation has long been the standard method for updating the weights of neural networks. Google has recently introduced a new framework, called LocoProp, for optimizing deep neural networks more efficiently. LocoProp reconceives a neural network as a modular composition of layers: it decomposes the network into separate layers, each with its own regularizer, output target, and loss function, and applies local updates in parallel to minimize the local objectives.

LocoProp aims to improve on conventional weight-update algorithms, which treat the entire neural network as a single structure. Training a deep neural network involves minimizing a loss function by updating the weights of the units (or neurons). Backpropagation computes the gradient (or derivative) of the loss with respect to the weights, and gradient descent then uses that gradient to update them.
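To make the conventional update concrete, here is a minimal sketch of gradient descent in plain Python. The toy linear model, the data, the learning rate, and the function names are all assumptions chosen for illustration; they do not come from the LocoProp work. The gradient is written out by hand here, whereas in a deep network backpropagation would compute it.

```python
def mse_loss_and_grad(weights, xs, ys):
    """Mean squared error of the toy model y = w0 * x + w1, plus its gradient."""
    n = len(xs)
    residuals = [weights[0] * x + weights[1] - y for x, y in zip(xs, ys)]
    loss = sum(r * r for r in residuals) / n
    grad_w0 = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
    grad_w1 = 2.0 * sum(residuals) / n
    return loss, [grad_w0, grad_w1]


def gradient_descent_step(weights, grads, learning_rate):
    """Move every weight one step against its gradient."""
    return [w - learning_rate * g for w, g in zip(weights, grads)]


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

weights = [0.0, 0.0]
initial_loss, _ = mse_loss_and_grad(weights, xs, ys)

for _ in range(500):
    _, grads = mse_loss_and_grad(weights, xs, ys)
    weights = gradient_descent_step(weights, grads, learning_rate=0.05)

final_loss, _ = mse_loss_and_grad(weights, xs, ys)
print(weights, initial_loss, final_loss)
```

Every weight is updated from the gradient of one global loss, which is the single-structure view that LocoProp departs from.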

In LocoProp, each layer is allotted its own weight regularizer, output target, and loss function. The loss function of each layer is designed to match the layer’s activation function. By using this formulation, training minimizes the local losses for a given mini-batch of examples, iteratively and in parallel across layers.
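The layerwise idea can be sketched roughly as follows. This is an illustrative toy, not Google's implementation: each "layer" is a single scalar weight with an identity activation, the local loss is a squared error toward a fixed target, the regularizer keeps the weight close to its previous value, and the targets are simply given as numbers. How LocoProp actually chooses targets, losses, and regularizers is described in the original work; the names `local_update`, `local_objective`, and `reg_strength` are hypothetical.

```python
def local_objective(w, w_old, inputs, targets, reg_strength=0.1):
    """Local loss plus a regularizer that keeps w near its previous value.

    Illustrative choice (an assumption, not taken from the paper):
        0.5 * mean((w * x - t)^2) + 0.5 * reg_strength * (w - w_old)^2
    """
    n = len(inputs)
    fit = 0.5 * sum((w * x - t) ** 2 for x, t in zip(inputs, targets)) / n
    return fit + 0.5 * reg_strength * (w - w_old) ** 2


def local_update(w_old, inputs, targets, reg_strength=0.1, lr=0.1, num_steps=10):
    """Run a few gradient steps on one layer's local objective."""
    w = w_old
    n = len(inputs)
    for _ in range(num_steps):
        loss_grad = sum((w * x - t) * x for x, t in zip(inputs, targets)) / n
        reg_grad = reg_strength * (w - w_old)
        w -= lr * (loss_grad + reg_grad)
    return w


# Two toy layers, each with its own weight, mini-batch inputs, and targets.
layers = [
    {"w": 0.5, "inputs": [1.0, 2.0, 3.0], "targets": [1.0, 2.0, 3.0]},
    {"w": -1.0, "inputs": [1.0, -1.0, 0.5], "targets": [0.5, -0.5, 0.25]},
]

# No layer's update reads another layer's new weight, so these calls are
# independent and could run in parallel; a plain loop is used for simplicity.
new_weights = [
    local_update(layer["w"], layer["inputs"], layer["targets"]) for layer in layers
]
print(new_weights)
```

Because each local problem depends only on that layer's own inputs, targets, and previous weight, the per-layer updates do not need to wait on one another within a mini-batch.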

Introducing LocoProp, a new framework that reconceives a neural network as a modular composition of layers—each of which is trained with its own weight regularizer, target output and loss function—yielding both high performance and efficiency. Read more → https://t.co/yjxOf8uKS9

— Google AI (@GoogleAI) July 29, 2022

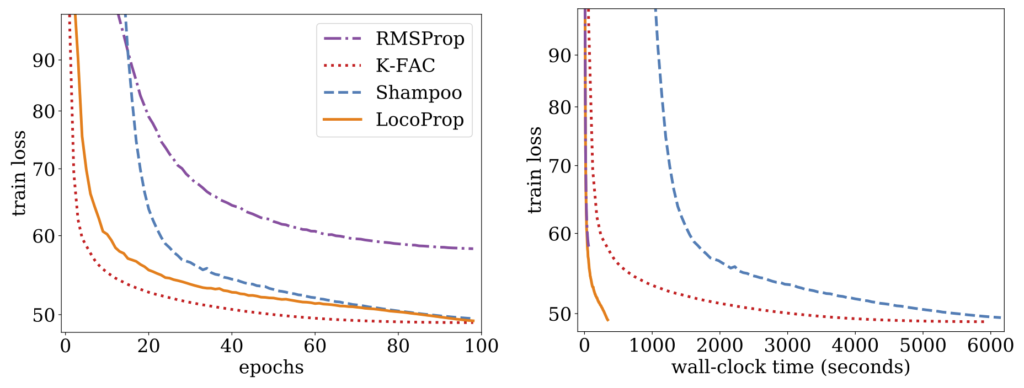

In experiments on a deep autoencoder model, LocoProp performed significantly better than first-order optimizers and was comparable to higher-order optimizers, while being significantly faster when run on a single GPU.

LocoProp provides the flexibility to choose the layerwise regularizers, targets, and loss functions, which allows new update rules to be developed from these choices. Work is in progress to extend LocoProp to larger-scale models.

Check out LocoProp on GitHub here, or read more about it here.
