Noisy Student is a semi-supervised learning approach that extends the ideas of self-training and knowledge distillation. It was proposed by researchers at Google and Carnegie Mellon University (CMU). The paper, "Self-training with Noisy Student improves ImageNet classification", came out in June 2020. In this blog post, we briefly cover the Noisy Student training algorithm and the results achieved on the ImageNet dataset by an EfficientNet trained using this methodology.

We use image datasets as the running example, just as the original research did. The rest of the post is organized as follows. The first part covers the algorithm for training a noisy student. The second part covers the results achieved on the ImageNet dataset. The last part is the conclusion and recaps what we learned.

Algorithm

In this section, we discuss the Noisy Student training algorithm. It consists of three main steps: training a teacher model, using the teacher model to generate pseudo-labels for unlabeled images, and training a student model on the labeled and unlabeled images. We discuss each of these steps in detail below.

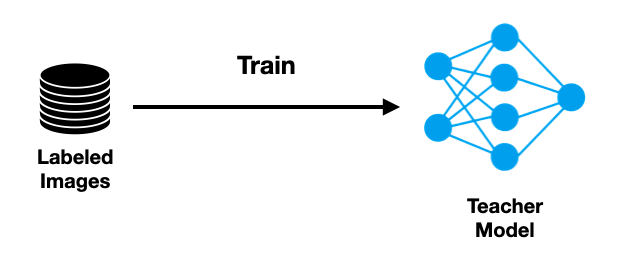

Training Teacher Model

We start by training a model on the labeled images. This is ordinary supervised training, the same way we would train any other classifier on the data. This model is called the teacher model.

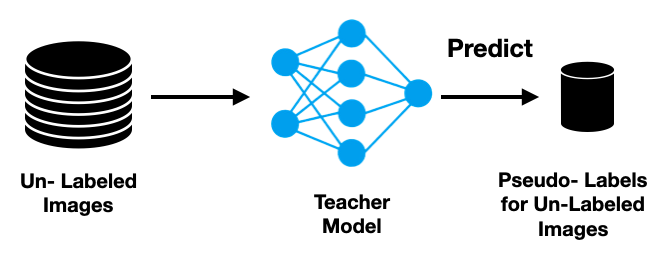

Generating Pseudo-Labels using Teacher Model

We use the teacher model trained on the labeled images to generate pseudo-labels for the unlabeled images. They are called pseudo-labels because the label predicted by the teacher may or may not be the correct label for the image. Although the pseudo-labels are not always correct, a well-trained teacher gets most of them right, so they carry far more information than random guesses. This step is important because it takes advantage of the huge corpus of unlabeled images that is available. In comparison, labeled images are available in much smaller quantities because of the cost of labeling them.

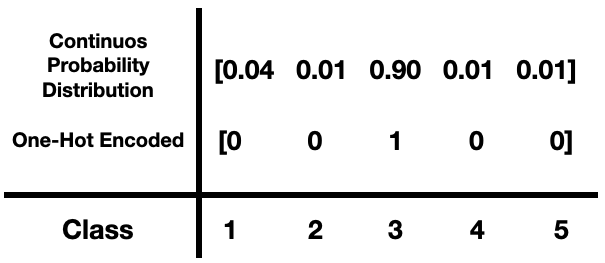

Note that no noise is added while generating pseudo-labels, neither to the model (through stochastic depth, for example) nor to the data (through data augmentation, for example). The pseudo-labels can be one-hot encoded (hard labels) or continuous probability distributions (soft labels).

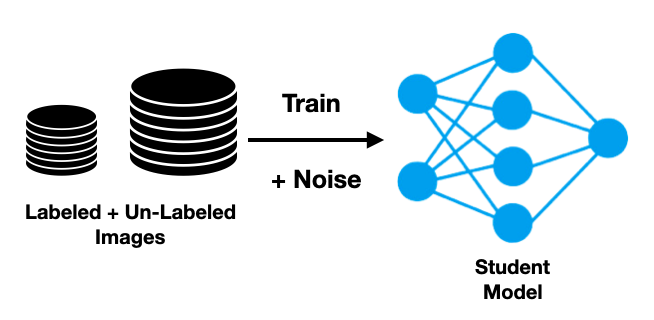
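The difference between hard and soft pseudo-labels can be sketched in a few lines of plain Python. This is only an illustration: the `softmax`, `soft_label`, and `hard_label` helpers and the example logits are made up for this post and stand in for the raw output of a real teacher network.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_label(logits):
    """Soft pseudo-label: the teacher's full probability distribution."""
    return softmax(logits)

def hard_label(logits):
    """Hard pseudo-label: one-hot vector for the most likely class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return [1.0 if i == best else 0.0 for i in range(len(probs))]

# Hypothetical teacher output for one unlabeled image and three classes.
teacher_logits = [2.0, 0.5, -1.0]
print([round(p, 2) for p in soft_label(teacher_logits)])  # [0.79, 0.18, 0.04]
print(hard_label(teacher_logits))                         # [1.0, 0.0, 0.0]
```

A soft label keeps the teacher's uncertainty (here, some probability mass on the second class), while a hard label discards it.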

Training the Student Model on Labeled and Unlabeled Images

We now build a new model that is at least as large as the teacher model. This model is called the student model. The student is trained on a combination of the labeled images and the pseudo-labeled images. Noise is injected into the student during training: data augmentation adds noise to the data, while stochastic depth and dropout add noise to the model. It is this noise that lets the student generalize better than the teacher, improving both the accuracy and the robustness of the model.
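To make the two sources of model noise concrete, here is a minimal sketch in plain Python. It is not the code used in the paper. The function names and the list-based "features" are assumptions made for illustration, and the stochastic depth rule follows the original formulation of that technique as I understand it (skip the residual branch at random during training, scale it by its survival probability at inference).

```python
import random

def dropout(values, rate, rng, training=True):
    """Inverted dropout: zero each value with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def residual_block_with_stochastic_depth(x, branch, survival_prob, rng, training=True):
    """Residual block y = x + branch(x) whose branch is randomly skipped in training."""
    if training:
        if rng.random() < survival_prob:
            return [xi + bi for xi, bi in zip(x, branch(x))]
        return list(x)  # branch dropped: the block acts as the identity
    # At inference the branch is always used, scaled by its survival probability.
    return [xi + survival_prob * bi for xi, bi in zip(x, branch(x))]

rng = random.Random(0)                        # fixed seed so runs are repeatable
features = [1.0, 2.0, 3.0, 4.0]               # hypothetical activations
double = lambda xs: [2.0 * v for v in xs]     # hypothetical residual branch

print(dropout(features, rate=0.5, rng=rng))                              # noisy (student training)
print(residual_block_with_stochastic_depth(features, double, 0.8, rng))  # noisy (student training)
print(residual_block_with_stochastic_depth(features, double, 0.8, rng, training=False))  # deterministic
```

With `training=False` both functions are deterministic, which matches how the teacher is run when it generates pseudo-labels.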

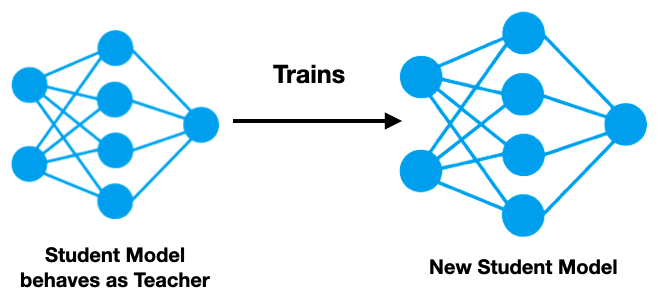
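The student's training objective can be sketched as follows, assuming (as I read the paper) that it is the average cross-entropy on the labeled batch plus the average cross-entropy on the pseudo-labeled batch, added with equal weight. The function names and the tiny batches are hypothetical. In real training, the predictions would come from the noised student network.

```python
import math

def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))

def student_loss(labeled_batch, pseudo_batch):
    """Each batch is a list of (target_distribution, student_probabilities) pairs."""
    labeled_term = sum(cross_entropy(t, p) for t, p in labeled_batch) / len(labeled_batch)
    pseudo_term = sum(cross_entropy(t, p) for t, p in pseudo_batch) / len(pseudo_batch)
    return labeled_term + pseudo_term

# Hypothetical two-class example: one labeled image, one pseudo-labeled image.
labeled_batch = [([1.0, 0.0], [0.9, 0.1])]   # true one-hot label vs. student prediction
pseudo_batch = [([0.8, 0.2], [0.7, 0.3])]    # teacher's soft label vs. student prediction
print(student_loss(labeled_batch, pseudo_batch))
```

The same function works for hard pseudo-labels: pass a one-hot vector as the target instead of a soft distribution.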

The Student becomes the Teacher

The student model now becomes the teacher and labels the unlabeled images with new pseudo-labels. Since this teacher is more accurate than the previous one, it generates more accurate pseudo-labels. We then create another student model that is at least as large as the new teacher and train it in the same way as the previous student. This process is repeated for a few iterations.
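The whole loop can be sketched end to end on a toy problem. To keep the example runnable without any deep learning library, it swaps in deliberately simple stand-ins: a nearest-centroid classifier on 1-D points instead of an EfficientNet, and Gaussian jitter on the inputs instead of image augmentation (there is no model noise here). All names and data are made up for illustration.

```python
import random

def fit_centroids(xs, ys):
    """Train a toy 'model': the mean input value of each class."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(model, x):
    """Return the class whose centroid is closest to x (no noise applied)."""
    return min(model, key=lambda label: abs(model[label] - x))

def noisy_student(labeled_x, labeled_y, unlabeled_x, iterations=3, jitter=0.1, seed=0):
    rng = random.Random(seed)
    # Step 1: train the teacher on the labeled data only.
    teacher = fit_centroids(labeled_x, labeled_y)
    for _ in range(iterations):
        # Step 2: the un-noised teacher pseudo-labels the unlabeled data.
        pseudo_y = [predict(teacher, x) for x in unlabeled_x]
        # Step 3: train a student on labeled + pseudo-labeled data with input noise.
        all_x = list(labeled_x) + list(unlabeled_x)
        all_y = list(labeled_y) + pseudo_y
        noised_x = [x + rng.gauss(0.0, jitter) for x in all_x]
        student = fit_centroids(noised_x, all_y)
        # Step 4: the student becomes the teacher for the next round.
        teacher = student
    return teacher

labeled_x, labeled_y = [0.0, 1.0, 9.0, 10.0], ["cat", "cat", "dog", "dog"]
unlabeled_x = [0.5, 1.5, 2.0, 8.0, 8.5, 9.5]
model = noisy_student(labeled_x, labeled_y, unlabeled_x)
print(predict(model, 1.2), predict(model, 8.8))  # cat dog
```

In this toy version the student has the same capacity as the teacher. In the real algorithm, the student can also be made larger at each iteration.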

Results obtained on ImageNet

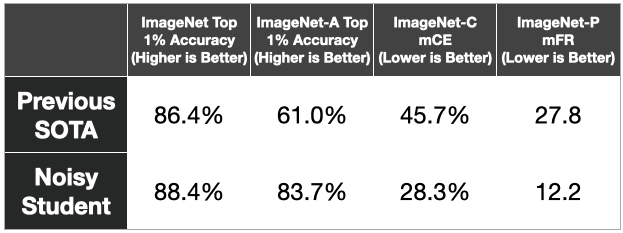

In the original research, a total of 300 million unlabeled images were used for training along with the labeled images. Training an EfficientNet model with Noisy Student on this data improved the ImageNet top-1 accuracy to 88.4%. This is 2 percentage points higher than the previous state-of-the-art model. The accuracy on ImageNet-A, a test set of naturally occurring images that are difficult to classify, also improved by a large margin. The mean corruption error (mCE) on ImageNet-C and the mean flip rate (mFR) on ImageNet-P were also reduced considerably by training with the Noisy Student algorithm. These results demonstrate the robustness and generalization capability of models trained using Noisy Student. The table below compares previous state-of-the-art models against an EfficientNet model trained using Noisy Student on various datasets.

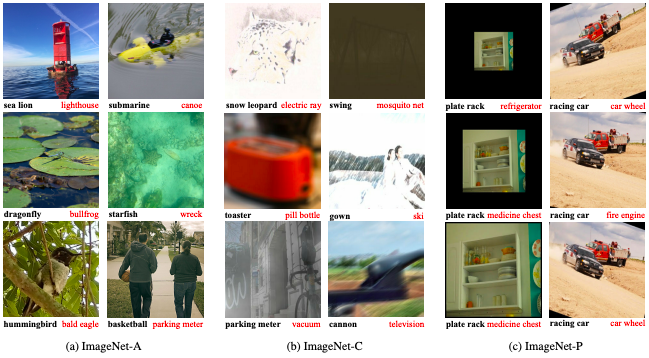
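For readers unfamiliar with mCE, here is a small sketch of how it is computed, assuming the ImageNet-C benchmark's definition as I understand it: for each corruption type, the model's error rates are summed over severity levels and divided by the same sum for a baseline model (AlexNet in the benchmark), and the resulting ratios are averaged and reported as a percentage. The function name and all numbers below are hypothetical and are not results from the paper.

```python
def mean_corruption_error(model_err, baseline_err):
    """mCE in percent.

    model_err / baseline_err map a corruption name to a list of top-1 error
    rates (0.0 to 1.0), one entry per severity level.
    """
    ratios = []
    for corruption, errors in model_err.items():
        # Corruption error: model error normalized by the baseline's error.
        ratios.append(sum(errors) / sum(baseline_err[corruption]))
    return 100.0 * sum(ratios) / len(ratios)

# Made-up error rates for two corruption types and two severity levels.
model_err = {"gaussian_noise": [0.2, 0.3], "motion_blur": [0.1, 0.3]}
baseline_err = {"gaussian_noise": [0.4, 0.6], "motion_blur": [0.4, 0.4]}
print(mean_corruption_error(model_err, baseline_err))  # about 50.0
```

A value below 100 means the model is more robust to corruptions than the baseline, so lower is better.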

The figure below contains a few images that were incorrectly classified by previous state-of-the-art models but are classified correctly by the EfficientNet model trained with Noisy Student.

Conclusion

In this blog post, we discussed how the student model is trained and how adding noise to the model and the data improves its robustness and accuracy. We also discussed the results obtained by training EfficientNet with the Noisy Student algorithm on the ImageNet dataset.